Developing an AI for Pokemon GO Trainer Battles

The site now features Training Battles, real-time battle simulations against an AI opponent! This was a huge endeavor and I was really excited to create an engaging practice tool that people could use anytime, anywhere. Pokemon GO Trainer Battles have a lot of complexity - from team compositions to in-game battle tactics like baiting shields and switching. Developing an AI to play the game might seem like a daunting task, but there are two core systems I designed to keep it elegant and engaging at the same time.

Design Goals

When I set out to create the AI, I primarily wanted to design an adversary that would play like a human opponent and that you could beat the same way you would beat a human player. Especially when it came to difficulty, this meant following a few principles:

- The AI needs to employ strategies and thinking that real players do

- The AI shouldn’t have information a human opponent wouldn’t (don’t make it omniscient about the player’s team selection or move usage)

- Difficulty must come exclusively from the AI’s behavior, not from artificial means like inflating Pokemon CP

- It’s okay for the AI to make mistakes

Team GO Rocket battles, for example, have proven to be a challenge in their own right, but for this project I knew it was vital to make the AI beatable in the same way you’d beat a human player. That is, I didn’t want to introduce any quirks that players could adapt to in order to beat the AI but that wouldn’t be relevant in actual Trainer Battles. I also didn’t want the AI to be outright unbeatable or infallible; if a player recognizes that the AI has made a mistake, that’s still a learning moment.

In order to accomplish this, I knew I needed to do the following:

- Allow the AI to assign long-term strategies that alter its default behavior

- Give it a means to evaluate matchups in order to determine those strategies

One of my first steps was to catalogue as many player strategies as I could and organize them in an algorithmic way (“if this, do that”). If my opponent is low on HP, let’s finish them off with Fast Moves and farm some energy along the way. If my opponent is about to get a Charged Move that will be bad for me, let’s duck out of there and absorb it with another Pokemon.
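To make the “if this, do that” idea concrete, here is a minimal sketch of how such a strategy catalogue could be expressed as condition/action rules. It is not the site’s actual code: `BattleState`, `Rule`, `RULES`, `suggested_actions`, and the 25% HP threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class BattleState:
    """Hypothetical snapshot of what the AI can observe on a given turn."""
    opponent_hp_ratio: float            # opponent's remaining HP as a fraction of max HP
    opponent_charged_move_ready: bool   # opponent likely has energy for a Charged Move
    opponent_move_is_bad_for_me: bool   # that Charged Move would hit my Pokemon hard
    can_switch: bool                    # my switch timer is available


@dataclass
class Rule:
    name: str
    condition: Callable[[BattleState], bool]
    action: str


# "If this, do that": each rule pairs a condition with the action it suggests.
# The 0.25 HP threshold is an illustrative assumption, not a tuned value.
RULES: List[Rule] = [
    Rule("energy farming",
         lambda s: s.opponent_hp_ratio < 0.25,
         "farm energy with Fast Moves"),
    Rule("absorb with a switch",
         lambda s: (s.opponent_charged_move_ready
                    and s.opponent_move_is_bad_for_me
                    and s.can_switch),
         "switch to a Pokemon that can absorb the Charged Move"),
]


def suggested_actions(state: BattleState) -> List[str]:
    """Return the action of every rule whose condition matches the current state."""
    return [rule.action for rule in RULES if rule.condition(state)]
```

Keeping rules as data makes it easy to enable or disable individual strategies per difficulty level.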

Below is a table of some example strategies or traits, and the difficulty levels that employ them:

| | Novice | Rival | Elite | Champion |
|---|---|---|---|---|
| Shielding | ✔ | ✔ | ✔ | ✔ |
| 2 Charged Moves | | ✔ | ✔ | ✔ |
| Basic Switching | | ✔ | ✔ | ✔ |
| Energy Farming | | | ✔ | ✔ |
| Shield Baiting | | | ✔ | ✔ |
| Advanced Switching | | | | ✔ |
| Switch Clock Management | | | | ✔ |
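Reading the table as cumulative (each difficulty keeps every trait of the levels below it), the trait gating could be stored as a simple lookup, as in this sketch. The numbering 0 for Novice and 3 for Champion matches the levels used in the Team Selection section; Rival = 1, Elite = 2, and the trait keys are assumptions for illustration.

```python
from enum import IntEnum
from typing import Set


class Difficulty(IntEnum):
    NOVICE = 0
    RIVAL = 1
    ELITE = 2
    CHAMPION = 3


# Lowest difficulty at which each trait is enabled; higher levels keep everything below them.
TRAIT_MIN_DIFFICULTY = {
    "shielding": Difficulty.NOVICE,
    "two_charged_moves": Difficulty.RIVAL,
    "basic_switching": Difficulty.RIVAL,
    "energy_farming": Difficulty.ELITE,
    "shield_baiting": Difficulty.ELITE,
    "advanced_switching": Difficulty.CHAMPION,
    "switch_clock_management": Difficulty.CHAMPION,
}


def traits_for(level: Difficulty) -> Set[str]:
    """Return every trait whose minimum difficulty the given level meets."""
    return {trait for trait, minimum in TRAIT_MIN_DIFFICULTY.items() if level >= minimum}
```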

So how is this all implemented? Let’s take a look at the two core systems that make the AI tick!

Matchup Evaluation

There are so many variables to take into account when considering matchups, from typing to shields to energy. Practiced players will know the relevant matchups and what beats what.

Beating at the AI’s heart is the simulator you already know. It pits Pokemon with their current HP and energy against each other to obtain approximate knowledge of current or potential matchups. When it does this, the AI runs four different scenarios:

- Both Bait: Will I win this if my opponent and I both successfully bait shields?

- No Bait: Will I win this if I don’t bait shields but my opponent does?

- Neither Bait: Will I win this if neither of us baits shields?

- Farm: Will I win this using Fast Moves only?

If these scenarios look good, the AI will stick it out and decide on a strategy to try and win the matchup. If these scenarios don’t look good, it’ll look for an opportunity to switch.
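A minimal sketch of this evaluate-then-decide step might look like the following. The simulator itself is represented by a hypothetical `simulate` callback; the assumption that it returns a battle rating from 0 to 1000 (above 500 counting as a win), the reading of “No Bait” as “I don’t bait, my opponent does,” and the rule “commit to the best winning scenario” are all illustrative choices, not the site’s confirmed logic.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Scenario:
    my_bait: bool               # do I throw a cheaper Charged Move first to bait a shield?
    opp_bait: bool              # does the opponent?
    fast_moves_only: bool = False


# The four scenarios ("No Bait" read as: I don't bait, my opponent does).
SCENARIOS: Dict[str, Scenario] = {
    "both_bait": Scenario(my_bait=True, opp_bait=True),
    "no_bait": Scenario(my_bait=False, opp_bait=True),
    "neither_bait": Scenario(my_bait=False, opp_bait=False),
    "farm": Scenario(my_bait=False, opp_bait=False, fast_moves_only=True),
}

# Hypothetical simulator hook: takes a scenario, returns a battle rating (0-1000, > 500 = win).
SimulateFn = Callable[[Scenario], int]


def evaluate_matchup(simulate: SimulateFn) -> Dict[str, int]:
    """Run every scenario through the simulator and collect the ratings."""
    return {name: simulate(scenario) for name, scenario in SCENARIOS.items()}


def choose_plan(ratings: Dict[str, int], win_threshold: int = 500) -> str:
    """Commit to the best winning scenario, or look for a switch if none wins."""
    winning = {name: r for name, r in ratings.items() if r > win_threshold}
    if not winning:
        return "look for a switch"
    return max(winning, key=winning.get)
```

For example, if only the Fast-Move-only simulation comes out ahead, `choose_plan` returns `"farm"`; if every scenario loses, it signals that a switch is worth looking for.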

The AI performs matchup evaluation at a few specific moments:

- During team selection in Tournament Mode to evaluate best picks and counter picks

- At the beginning of the game

- After either player switches

- After each Charged Move

This results in a tendency for the AI to switch after Charged Moves, which lines up with the real player behavior of queuing a switch during Charged Moves. Evaluating matchups on every turn was also an option, but it resulted in erratic behavior; by evaluating only at these moments, the AI is better able to commit to a strategy.
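The “evaluate only at specific moments” design can be sketched as an event filter: a few events trigger a fresh evaluation, and everything else keeps the current plan. `BattleEvent`, `MatchupTracker`, and the `evaluate` hook are hypothetical names; team selection is left out because it happens before the battle starts.

```python
from enum import Enum, auto
from typing import Callable, Optional


class BattleEvent(Enum):
    GAME_START = auto()
    SWITCH = auto()         # either player switched
    CHARGED_MOVE = auto()   # a Charged Move resolved
    FAST_MOVE = auto()
    TURN = auto()           # an ordinary turn passed


# In-battle moments that trigger a fresh matchup evaluation.
REEVALUATE_ON = {BattleEvent.GAME_START, BattleEvent.SWITCH, BattleEvent.CHARGED_MOVE}


class MatchupTracker:
    def __init__(self, evaluate: Callable[[], str]):
        self.evaluate = evaluate        # hypothetical hook that runs the scenario simulations
        self.plan: Optional[str] = None
        self.evaluations = 0

    def on_event(self, event: BattleEvent) -> Optional[str]:
        # Other events keep the current plan, so the AI commits to its strategy.
        if event in REEVALUATE_ON:
            self.plan = self.evaluate()
            self.evaluations += 1
        return self.plan
```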

Decision Making

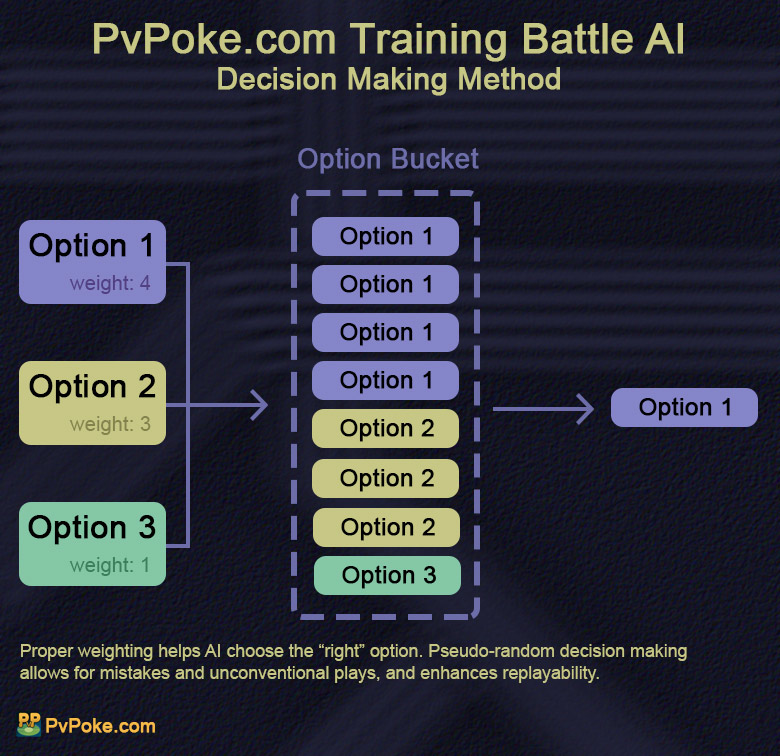

Once the AI has gathered all of this information, how does it decide what to do? The AI’s second core system is here to help! Everything the AI chooses or does originates from a pseudo-random decision-making function. Simply put, this function acts as a lottery for different choices, and the AI’s available information determines each option’s weight, or how many times that option is entered into the “drawing.”

As shown above, the AI randomly chooses between several options, and the likelihood of selecting any particular option depends on its weight. The AI adjusts these weights to help itself make the “right” choice, but the door is always open for something unconventional.
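Here is a minimal sketch of the weighted lottery described above, assuming integer weights where an option with weight w holds w “tickets.” The function name `decide` and the option labels are illustrative, not taken from the site’s code.

```python
import random
from typing import Dict, Optional


def decide(weights: Dict[str, int], rng: Optional[random.Random] = None) -> str:
    """Weighted lottery: an option with weight w is entered w times into the drawing."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one option needs a positive weight")
    rng = rng or random.Random()
    ticket = rng.randrange(total)   # draw one ticket number in [0, total)
    for option, weight in weights.items():
        if ticket < weight:
            return option
        ticket -= weight
    raise AssertionError("unreachable: ticket exceeded total weight")
```

With `decide({"shield": 4, "no shield": 1})`, the AI shields about 80% of the time but still occasionally takes the risk. Python’s `random.choices(options, weights=...)` does the same job; the explicit loop just mirrors the lottery metaphor.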

This pseudo-randomness was an important part of my design goals - I didn’t want the AI to always play the same matchups the same way, and I wanted to add a touch of unpredictability. With this system, the AI is open to mistakes and misplays, while at the same time capable of stumbling backwards into genius that wouldn’t be possible in a more rigid decision-making system.

Literally everything the AI does passes through this system - from roster picks to choosing strategies, switches, or whether or not to shield incoming attacks.

Team Selection

If you’re trying to make a challenging AI, you also need to give it a challenging team. There are a few different ways I could have gone about this; one simple solution, for example, would be to make a list of preset teams for the AI to pick from. This would have been fine, but I wanted a high degree of variability so players can really sharpen their own picking skills. How do you generate random teams that are also balanced and competitive, and vary by difficulty?

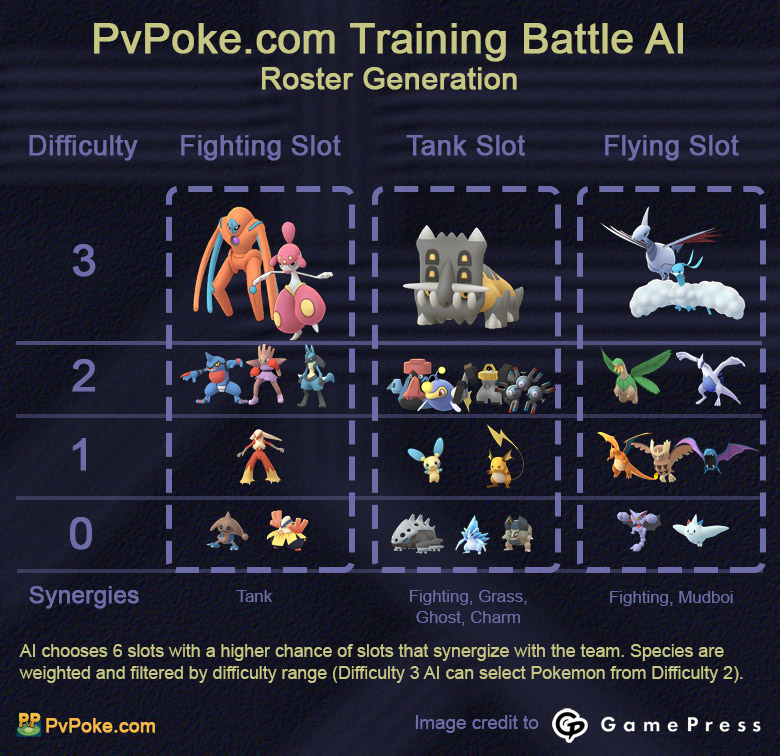

The answer I went with is a slot system. Picks are categorized into several slots (“Tank,” “Grass,” “Mudboi,” etc.), and the AI uses the pseudo-random decision making described above to select a slot and then a Pokemon within that slot. Once a slot and Pokemon are picked, they can’t be picked again, and the AI repeats this until all 6 of its roster spots are filled.

Each slot also has synergies with other slots. When a “Mudboi” is selected, for example, the AI is more likely to pick something from the “Flying” slot to help cover the Grass weakness.
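The slot system with synergies can be sketched as repeated weighted draws over the remaining slots, where picking a slot adds weight to the slots it pairs well with. Only the slot names “Tank,” “Grass,” “Mudboi,” and “Flying” come from the post; the other slots, their members, and all weights are made-up placeholders, and `build_roster` is a hypothetical name.

```python
import random
from typing import Dict, List, Set

# Illustrative slot data: members, extra slots, and numbers are assumptions.
SLOTS: Dict[str, List[str]] = {
    "Tank": ["Registeel", "Bastiodon"],
    "Grass": ["Venusaur", "Meganium"],
    "Mudboi": ["Swampert", "Whiscash", "Quagsire"],
    "Flying": ["Altaria", "Skarmory"],
    "Fighting": ["Medicham", "Machamp"],
    "Fairy": ["Azumarill", "Clefable"],
    "Ghost": ["Sableye", "Froslass"],
}

# Weight added to other slots once a slot is picked ("Mudboi" -> "Flying" covers the Grass weakness).
SYNERGIES: Dict[str, Dict[str, int]] = {"Mudboi": {"Flying": 4}}
BASE_WEIGHT = 1


def build_roster(rng: random.Random, size: int = 6) -> List[str]:
    weights: Dict[str, int] = {slot: BASE_WEIGHT for slot in SLOTS}
    roster: List[str] = []
    used: Set[str] = set()
    while len(roster) < size and weights:
        names = list(weights)
        slot = rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
        del weights[slot]                       # a slot can't be picked twice
        candidates = [p for p in SLOTS[slot] if p not in used]
        if not candidates:
            continue
        pokemon = rng.choice(candidates)
        roster.append(pokemon)
        used.add(pokemon)                       # nor can a Pokemon
        for other, bonus in SYNERGIES.get(slot, {}).items():
            if other in weights:                # only boost slots that are still open
                weights[other] += bonus
    return roster
```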

The last element to consider is difficulty. At lower difficulties, the AI picks more off-meta Pokemon or thriftier alternatives to meta picks. Each Pokemon within a slot is assigned a difficulty level, and the AI will only select it if the Pokemon’s difficulty level is within 1 of its own. For example, a level 3 AI (“Champion”) will only select Pokemon that belong to difficulty 2 or 3, while a level 0 AI (“Novice”) will only select Pokemon that belong to difficulty 0 or 1. This allows for a range of pick options while keeping picks strong at higher difficulties and less optimal at lower difficulties.
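The “within 1 of its own level” rule amounts to a one-line filter over a slot’s members, sketched below with difficulty levels 0 (Novice) through 3 (Champion) as in the paragraph above. The function name `eligible_picks` and the pool entries are hypothetical.

```python
from typing import Dict, List


def eligible_picks(pool: Dict[str, int], ai_level: int) -> List[str]:
    """Keep Pokemon whose assigned difficulty is within 1 of the AI's own level."""
    return [name for name, level in pool.items() if abs(level - ai_level) <= 1]
```

For a pool of `{"off_meta_pick": 0, "budget_pick": 1, "solid_pick": 2, "top_meta_pick": 3}`, a level 3 AI keeps only the last two entries, and a level 0 AI keeps only the first two, matching the examples above.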

Selecting a roster is one thing, but how about selecting a team? Players’ pick strategies fall into a few categories. This Reddit post by C9Gotem goes in depth about the different tiers of thinking involved in pick strategies, and was a helpful reference as I worked on this part of the AI.

In Tournament Mode, the AI can employ the following pick strategies:

- Basic: The AI chooses an ordered set of three directly from its roster. This is similar to a player using a preset team of 3 that they’re well practiced with. Because of the slot synergies, these teams are usually balanced, but this strategy can also have some unconventional results.

- Best: The AI leads with the Pokemon that has the most positive matchups against the opponent. The AI then selects a “bodyguard” for the lead to counter its counters, and rounds out the team with a Pokemon that fits well with both.

- Counter: Like the above, but the AI leads with a Pokemon that counters the opponent’s best Pokemon.

- Unbalanced: The AI selects two well-rounded Pokemon and leads with a bodyguard for them both. This strategy aims to produce lineups that overpower typical balanced teams and control the flow of battle.

- Same Team: After a match, the AI will use the same team again. It’s more likely to do this after a win.

- Same Team, Different Lead: After a match, the AI will use the same team again but lead with the previous team’s bodyguard. This has the effect of countering the previous lead’s counter. It’s more likely to do this after a win.

- Counter Last Lead: The AI will lead with its best counter to the opponent’s previous lead. It’s more likely to do this after a loss.
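Because every choice goes through the weighted lottery, the “more likely after a win” and “more likely after a loss” behavior can be sketched as weight adjustments before the draw. All weight values, the `"win"`/`"loss"` labels, and the function names here are assumptions; the post only states the direction of each adjustment.

```python
import random
from typing import Dict, Optional

# Illustrative base weights; the post doesn't give numbers.
BASE_WEIGHTS: Dict[str, int] = {
    "basic": 4,
    "best": 4,
    "counter": 4,
    "unbalanced": 2,
    "same_team": 1,
    "same_team_different_lead": 1,
    "counter_last_lead": 1,
}
REPEAT_STRATEGIES = ("same_team", "same_team_different_lead", "counter_last_lead")


def strategy_weights(last_result: Optional[str]) -> Dict[str, int]:
    """Adjust pick-strategy weights based on the previous match ("win", "loss", or None)."""
    weights = dict(BASE_WEIGHTS)
    if last_result is None:
        # First match: nothing to repeat or counter yet.
        for name in REPEAT_STRATEGIES:
            weights[name] = 0
    elif last_result == "win":
        weights["same_team"] += 4
        weights["same_team_different_lead"] += 4
    elif last_result == "loss":
        weights["counter_last_lead"] += 4
    return weights


def choose_strategy(last_result: Optional[str], rng: random.Random) -> str:
    """Draw one pick strategy using the adjusted weights."""
    weights = strategy_weights(last_result)
    names = [n for n, w in weights.items() if w > 0]
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
```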

In this way, the AI picks similarly to conventional players and hopefully makes for good practice when it comes to picking in a tournament!

Closing Thoughts

This project took a huge amount of passion and effort. I hope it enhances your enjoyment of the game and helps you develop a winning skill set! Big picture, I also hope these Training Battles might inspire newcomers to PvP, other developers, and, if they should see them, Niantic themselves. Here’s hoping for a bright and exciting future!